Airflow explained in 3 minutes

Hey — Tom here.

Apache Airflow is becoming the standard for managing data pipelines, and understanding it isn't optional anymore.

Big companies expect data professionals to know how workflows fit together, even if you're not the one building them.

It's also why roles that ask for it tend to pay more.

Here's what we're covering today:

- What Airflow actually does

- The real problems it solves that manual processes can't handle

- Why big companies love it (and pay well for skills in it)

Let's get into it...

What Is Airflow, Really?

Apache Airflow is a platform that schedules and monitors data workflows.

Imagine you've just started a new role as your data team's project manager.

Here's what you're now responsible for:

- Extract data from your database

- Clean and transform it

- Run data quality checks

- Load it into your data warehouse

- Trigger dashboard refreshes

- Send stakeholders a notification

Instead of running scripts by hand and hoping you remembered every step in the right order, you let Airflow handle the coordination.

With Airflow, you define the workflow once, and it handles:

- Running tasks in the right order

- Retrying failed steps automatically

- Alerting you when something breaks

- Tracking what ran when (and what didn't)
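
To make the "define once, let the system run it" idea concrete, here is a minimal plain-Python sketch. It is not Airflow's API: `run_pipeline` and the three task functions are hypothetical names made up for illustration. In Airflow itself, retry behaviour is configured through task arguments such as `retries` and `retry_delay`, and the scheduler does the looping for you.

```python
import time


def run_pipeline(tasks, max_retries=2, retry_delay=0.0):
    """Run (name, function) pairs in order, retrying failures.

    Returns a dict mapping each task name to its final state.
    """
    states = {name: "not_run" for name, _ in tasks}
    for name, fn in tasks:
        for attempt in range(1, max_retries + 2):  # first try plus retries
            try:
                fn()
            except Exception as exc:
                print(f"{name}: attempt {attempt} failed: {exc}")
                time.sleep(retry_delay)
            else:
                states[name] = "success"
                break
        else:
            # Every attempt failed: raise the alarm and stop the pipeline,
            # leaving later tasks marked "not_run".
            states[name] = "failed"
            print(f"ALERT: {name} failed after {max_retries + 1} attempts")
            break
    return states


# Hypothetical tasks: the transform step fails once, then succeeds on retry.
calls = {"transform": 0}


def extract():
    pass


def transform():
    calls["transform"] += 1
    if calls["transform"] == 1:
        raise RuntimeError("source system timed out")


def load():
    pass


states = run_pipeline(
    [("extract", extract), ("transform", transform), ("load", load)]
)
print(states)
```

The returned `states` dictionary is the "what ran when (and what didn't)" record in miniature: every task ends up as `success`, `failed`, or `not_run`.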

The Problems Airflow Actually Solves

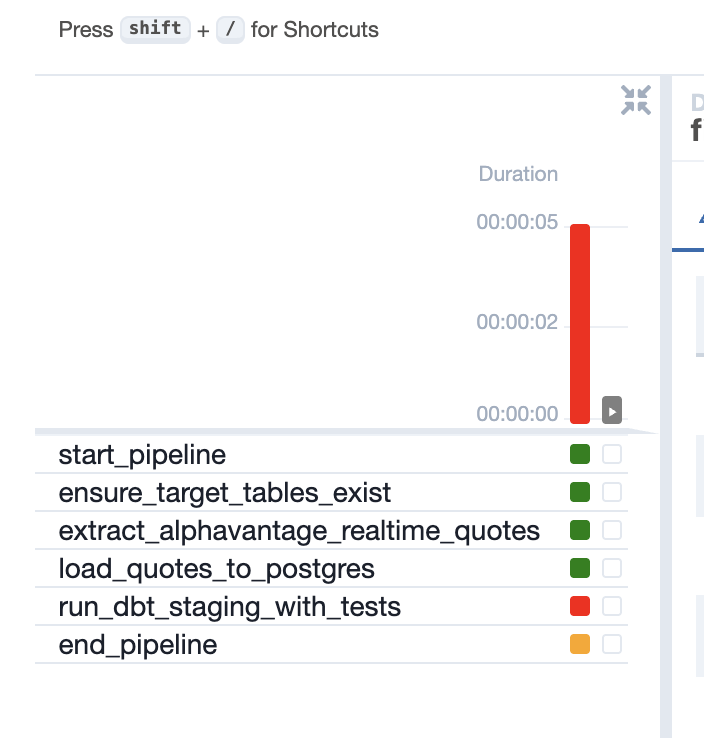

1. The "Everything Breaks When You Least Expect It" Problem

If you've worked in data before, this will be all too familiar:

Your automated reports work perfectly for weeks, then fail overnight when the source system changes slightly.

You discover this when stakeholders email asking why dashboards are empty.

Airflow gives you visibility and control.

Tasks have clear success/failure states, automatic retries, and proper alerting.

You know what broke and when.

Here's an example of what the UI looks like with successful, failed, and up-for-retry tasks:

2. The "Complex Dependencies" Problem

Real data pipelines aren't linear.

Maybe your customer analysis needs both the orders table AND the support tickets table to be updated first.

Managing these dependencies manually is a nightmare.

Airflow handles task dependencies automatically.

You define what needs to happen before what, and it figures out the execution order.
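
Working out an execution order from "what needs to happen before what" is a topological sort. The sketch below uses Python's standard-library `graphlib` (Python 3.9+) rather than Airflow, and the task names are hypothetical, taken from the customer analysis example above. In an Airflow DAG, the same relationships are usually declared between tasks with the `>>` operator.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it can start.
dependencies = {
    "update_orders": set(),
    "update_support_tickets": set(),
    "customer_analysis": {"update_orders", "update_support_tickets"},
    "refresh_dashboard": {"customer_analysis"},
}

sorter = TopologicalSorter(dependencies)
sorter.prepare()

# Collect tasks in "waves": everything in one wave has all its
# prerequisites done, so those tasks could run in parallel.
waves = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())
    waves.append(ready)
    sorter.done(*ready)

for number, wave in enumerate(waves, start=1):
    print(f"wave {number}: {wave}")
```

The two table updates land in the first wave because neither depends on anything, while the analysis waits until both are done.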

You can see a visual representation of what needs to happen when in the Graph tab of the Airflow UI:

3. The "Scaling Bottleneck" Problem

As your data team grows, coordinating who's running what becomes chaos.

Scripts get duplicated, schedules conflict, and nobody knows which version is the "real" one.

Airflow centralises workflow management.

Everything runs through one system, with workflow code kept in version control, shared schedules, and clear ownership.

Why Big Companies Love It (And Pay Well for Skills in It)

Large companies choose Airflow because it integrates seamlessly with their existing infrastructure and scales to handle thousands of workflows without breaking.

The technical advantages that matter to big companies:

Stack-Agnostic Integration: Airflow has connectors for most of a typical data stack, including AWS services, Snowflake, and legacy databases.

No vendor lock-in.

Programmatic Flexibility: Unlike drag-and-drop tools, Airflow workflows are Python code.

This means complex business logic, dynamic task generation, and custom integrations that rigid GUI tools can't handle.
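
As a rough illustration of dynamic task generation, the sketch below builds one extract-and-load pair per table from a plain list, then adds a final report step that waits for all of them. It uses plain dictionaries so it runs without Airflow installed. `build_tasks` and the table names are hypothetical. In a real DAG file, the same kind of loop would create Airflow task objects instead.

```python
def build_tasks(tables):
    """Return a {task_name: set_of_prerequisites} map for the given tables."""
    tasks = {}
    for table in tables:
        tasks[f"extract_{table}"] = set()
        tasks[f"load_{table}"] = {f"extract_{table}"}
    # The report can only be built once every table has been loaded.
    tasks["build_report"] = {f"load_{table}" for table in tables}
    return tasks


tasks = build_tasks(["orders", "support_tickets", "customers"])
for name, prerequisites in tasks.items():
    print(name, "<-", sorted(prerequisites))
```

Adding a fourth table means adding one string to the list, not copying and pasting another pair of tasks.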

Battle-Tested Scalability: Companies like Airbnb, ING, and Adobe run thousands of DAGs on Airflow. It's proven to handle enterprise-scale workloads with proper resource management and distributed execution.

Comprehensive Monitoring: Built-in metrics, logging, and alerting that integrate with existing monitoring stacks. The visibility is a huge benefit.

This translates directly to your market value.

Airflow experience signals you understand:

- Enterprise-scale system integration

- Production workflow reliability and monitoring

- Infrastructure-as-code approaches to data pipelines

- How to work within existing technical constraints

That's why roles requiring Airflow skills typically pay £10-20k more than equivalent positions without infrastructure requirements.

TL;DR:

- Airflow coordinates data workflows: it's the project manager your data pipelines need, handling scheduling, dependencies, and monitoring

- It solves coordination problems: surprise failures, tangled dependencies, and scaling bottlenecks that manual processes can't handle

- Companies pay a premium for Airflow skills: it's stack-agnostic, programmatically flexible, and battle-tested at scale, making you worth £10-20k more

Now, we've gone through the theory. Let's actually set up and use Airflow step by step.

In this week's Premium, we cover:

- Understanding the components that make up Airflow in a production environment (based on my experience using it in some of the biggest companies in the world)

- How to set up Airflow in under 3 minutes using Docker (including instructions on how to set Docker up)

- Exploring the most popular parts of the UI, like finding DAGs, tasks, graphs, and logs

- We'll run our first DAG together in the Airflow UI and I'll show you exactly where to find the output

- We'll also go through a "real" example where we create some data, process that data, and produce a report, all using tasks in Airflow

Sound good? Join Premium today and let's get Airflow set up and working 👇🏻